About us

We are an interdisciplinary group of data science researchers at Bauhaus University, advised by Prof. Maurice Jakesch.

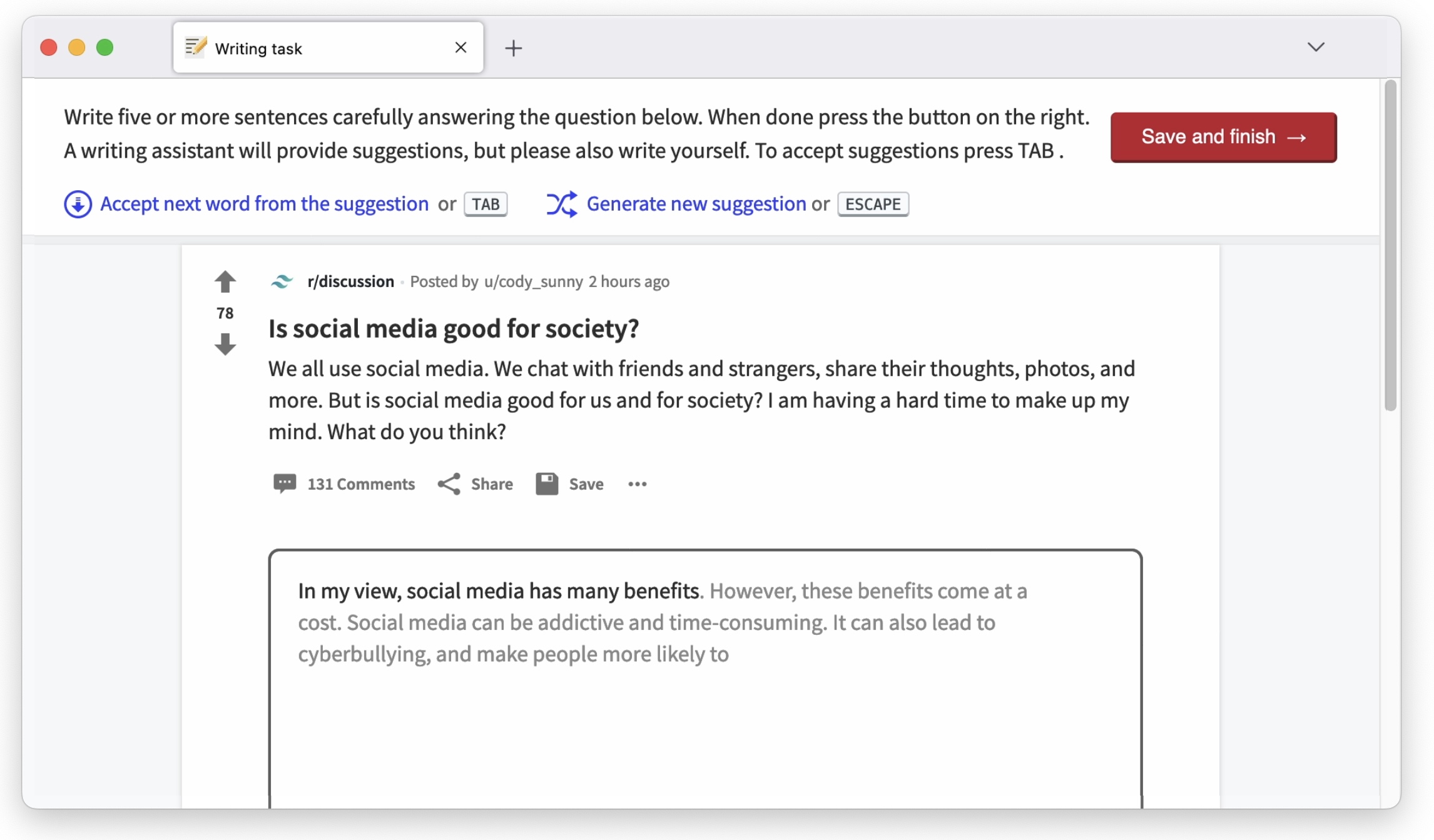

We use experiments, machine learning prototypes, and data science methods to study the impact of digital technologies and AI on society. For example, in the experiment above we test how an opinionated AI writing assistant influences users’ views. By diagnosing emerging problems and working towards early solutions, we contribute to a safer and more democratic information ecosystem.

Publications

Highlights

We are entering an era of AI-Mediated Communication (AI-MC) where interpersonal communication is not only mediated by technology, but is optimized, augmented, or generated by artificial intelligence. Our study takes a first look at the potential impact of AI-MC on online self-presentation. In three experiments we test whether people find Airbnb hosts less trustworthy if they believe their profiles have been written by AI. We observe a new phenomenon that...

If large language models like GPT-3 preferentially produce a particular point of view, they may influence people’s opinions on an unknown scale. This study investigates whether a language-model-powered writing assistant that generates some opinions more often than others impacts what users write, and what they think. In an online experiment, we asked participants (N=1,506) to write a post discussing whether social media is good for society. Treatment group participants...

Expertise

Societal Risks and Impacts of AI Systems

Our communication is increasingly intermixed with language generated by AI. While the development and deployment of large language models are progressing rapidly, their social consequences remain poorly understood. We work towards an understanding of the risks of large language models by empirically exploring their effects on social phenomena such as trust and opinions.

Misinformation and Political Polarization

Digital platforms are central to public discourse, yet may also facilitate the spread of misinformation and contribute to polarization. Using empirical methods, we analyze platform dynamics and user behavior to understand how digital platforms contribute to ideological divides. We aim to inform measures and policies for a healthier digital discourse.

Experiments and Computational Methods

Data-driven methods are transforming social analysis, enabling insights at unprecedented scales. We work with and refine new approaches for large-scale experimentation, causal inference, and mixed-methods analyses. In our work, we adapt concepts and tools from neighbouring disciplines, such as psychology, economics, and political science, for digital research.